There is a lot to do when comparing folders and deleting the duplicates.

This is not easy, because the folders are duplicates of each other but their locations are different.

When DO selects files for deletion, the selection is mixed.

Some files marked for deletion are in one folder and some are in the other folder.

Is it possible to say that "THIS folder" should be forced for deletion?

Then DO could mark the right files, instead of a mix from here and there.

Thanks

M

Opus always keeps the first file in each group and marks the others for deletion.

So you can sort the list by path or location to influence which folder things are kept in.
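As a rough illustration of why the sort order matters, here is a minimal Python sketch of the "keep the first file in each group" rule. It is not Opus code; the function name `mark_for_deletion`, the paths, and the `hash-a`/`hash-b` content IDs are all made up for the example.

```python
from collections import defaultdict

def mark_for_deletion(files, reverse=False):
    """files: iterable of (path, content_id) pairs.

    Sorts by path, groups by content_id, keeps the first file of each
    group and returns the remaining paths (the ones marked for deletion).
    """
    groups = defaultdict(list)
    for path, content_id in sorted(files, key=lambda f: f[0].lower(), reverse=reverse):
        groups[content_id].append(path)
    marked = []
    for paths in groups.values():
        marked.extend(paths[1:])  # the first entry of each group is kept
    return marked

# Hypothetical duplicate list: two files, each present in two folders.
files = [
    (r"D:\target\a.jpg", "hash-a"),
    (r"D:\source\a.jpg", "hash-a"),
    (r"D:\target\b.jpg", "hash-b"),
    (r"D:\source\b.jpg", "hash-b"),
]

by_path = mark_for_deletion(files)                   # ascending: "source" sorts first and is kept
reversed_marks = mark_for_deletion(files, reverse=True)  # descending: "target" is kept instead
print(by_path)
print(reversed_marks)
```

With only two folders, flipping the sort direction flips which folder keeps its files, which is the effect described above.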

It is not as easy as you suggest.

There are a few different things that have to be considered.

Say a file in the source folder is also in the destination folder.

Then it can be deleted from either of these folders.

But when further duplicates are found only within the destination folder, with no copy of them in the source folder, files will be lost if you sort all files of the destination folder together and delete them.

That is not exactly what I want.

Is it possible to define whether files are kept from the source or the target?

Often it is useful to define myself which file I want to keep, and not only the first one of a duplicate group.

Thanks

Mik

The duplicate finder doesn't have a source/destination concept since it isn't copying/moving files from/to locations; it's only finding and (optionally) deleting them.

Changing the sort order is the only way to influence which files it selects by default, outside of something complex like scripting.

A source / target concept would be very helpful for deleting duplicates.

It would only be an option. Why not integrate it? It would save a lot of work.

At the moment I have to use other software for that, on a virtual machine, to merge a lot of files and folders.

I don't manage files in MB or GB. I manage many TB and millions of files.

If there are only two folders (source & target) involved then you can sort by the Location or Full Path columns to influence which folders things are kept and selected in.

I used the duplicate file finder a few times only, but I also came across this problem.

Being able to protect files in specific paths from being selected/deleted would be really good.

Yes, you can try by sorting the location/path column and rerun the find, but once you have multiple folders, the result of a possible deletion of all the duplicates would give you a bigger mess than before!?

You'd end up with a lot of folders containing only a subset of your files, probably not what most people are after.

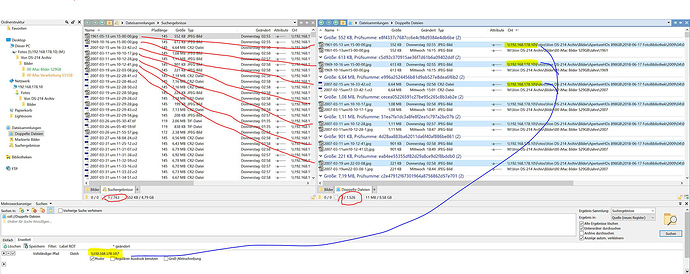

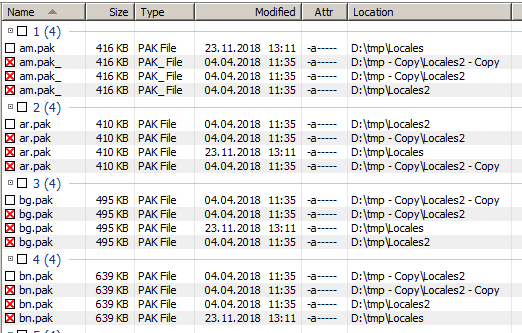

I made a little screenshot, as you can see, DO selects files randomly from the three folders I told it to look for duplicates in. If you could protect files in specific paths from being selected, this would give a way better and very convenient result.

In the German forum we also discussed this recently. I told the user that DO is a multi-purpose tool, that very specific tasks require a very specific tool, and that DO cannot be as specific in every part as specialized tools are. But nonetheless.. this little trick with protected paths, maybe it's possible in DO as well some day! o)

It doesn't have to be random if you don't want it to be. You aren't sorting by Location in your screenshot, so the selection wasn't done according to Location.

No need to re-run it (which can be time consuming). Just click the Select button in the Duplicate Files panel and it will recalculate the selection (delete mode off) or checkboxes (delete mode on).

That seems inherent to deleting duplicates?

If you want to only be left with one folder that contains all the files and no duplicates, and no folders containing only some files and missing others, wouldn't you want to move all the files into a single folder before or after deleting the duplicates? Or is the aim something different? I may just not have thought of the right goal here and I might be assuming you're doing something different.

You should be able to use the Edit > Select By Pattern > Advanced to deselect all files below a certain path if the aim is to avoid deleting anything in one folder and only remove duplicates that exist in other folders.

Some way of ranking or including/excluding specific folders might make sense, since this does come up a lot. We (as in GPSoft) would need to understand better how people are aiming to use the tool first, I think.

e.g. If we only allowed ranking of the folders in the "Find In" list, and something like your screenshot came from searching below D:\tmp (and not the folders below it individually) then that wouldn't solve things. If you needed to be able to rank/exclude folders anywhere below the starting point then that's a more difficult UI to design without making it as complex as the existing options for doing the same.

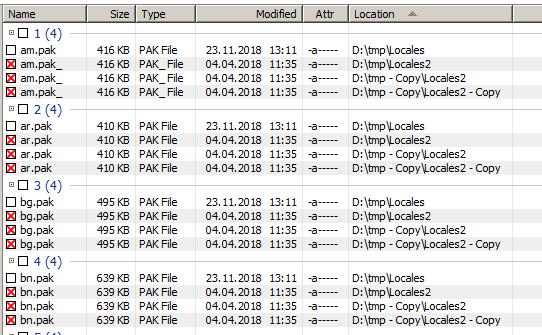

Yes, not an optimal screenshot.. let's look at this one:

Sorted by location as suggested, but what if I'd like to keep my files in all the folders named "Located2"?

I just tried your suggestion to use the advanced selection dialog to select specific parts of the find result, then use "selection to checkbox". I was not aware these "red-cross" check boxes behave like the "regular" check boxes. This surely gives some options for the next time I use this. Thank you.

It might still be easier to clarify upfront what folder(s) are not to be touched.

Maybe, but not necessarily. What needs to be prevented is that the duplicate find removes files from an "archive" or "already-sorted" folder. You don't want duplicates to be deleted in these. You want them deleted on your secondary backup drive, USB stick, whatever, but not in structures where a lot of sorting/archiving work went into already. Does this make sense?

Copying things together in one folder afterwards is not necessarily the ultimate goal. You might want to keep the folder structure on the USB/external drive for now, to make sorting easier later on. But the first move was to get rid of duplicates there, and just there.
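The "protected path" idea can be sketched in a few lines of Python. This is only an illustration of the rule being asked for, not anything DO does today; `select_deletions`, `is_under`, and the example paths are hypothetical.

```python
from pathlib import PureWindowsPath

def is_under(path, folder):
    """True if path is the folder itself or lies somewhere below it."""
    p = PureWindowsPath(path)
    f = PureWindowsPath(folder)
    return p == f or f in p.parents

def select_deletions(groups, protected):
    """groups: list of duplicate groups (each a list of paths).
    protected: folders whose files must never be marked for deletion.
    """
    marked = []
    for paths in groups:
        keep = [p for p in paths if any(is_under(p, root) for root in protected)]
        candidates = [p for p in paths if p not in keep]
        if not keep:
            # No copy in a protected folder: keep the first file anyway,
            # so the last remaining copy is never deleted.
            candidates = candidates[1:]
        marked.extend(candidates)
    return marked

# Hypothetical duplicate groups.
groups = [
    [r"E:\archive\2019\img1.jpg", r"F:\usb\img1.jpg"],   # archive copy exists
    [r"F:\usb\x\img2.jpg", r"F:\usb\y\img2.jpg"],         # duplicates only on the stick
]
marked = select_deletions(groups, protected=[r"E:\archive"])
print(marked)
```

Nothing under `E:\archive` is ever marked, and a group with no protected copy still keeps one file, which avoids the data-loss case raised earlier in the thread.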

It is very good to hear that I was not alone with the problem and someone else has also recognized where the problem lies.

I have to manage millions of files and have already written that in the German forum. But I was not satisfied with the answer and therefore addressed it here in the forum again.

If DO has integrated this possibility, then it should also be done right so that it is also helpful.

@MikCha

Is that your thread here? https://www.haage-partner.de/forum/viewtopic.php?f=47&t=5330

So maybe you learned something here, just as I did?! o)

You can obviously control the marked (duplicate) files for deletion with a "regular" advanced selection.

This is not the most convenient and straightforward way, but I must say that I kind of like the approach, after thinking twice. Combining two separate features (a rather raw duplicate find and an advanced selection) into something which gets your exact job done.

Not sure how well this automates, the duplicate finder cannot be run via custom commands, afair?!

The advanced selection can be stored and reused at least.

Yes, that is my post in the German forum.

At the moment I search for duplicates with my other Windows software.

DO is not useful or helpful for this at the moment. It finds duplicates that are not really duplicates.

When managing millions of files, the tool must be helpful and very easy to use, without so many steps.

I'm writing again about this problem.

I think that deleting from the source directory when comparing two directories would be a great help.

At the moment I compare two directories and delete from one of them by location.

It is very hard to scroll to the middle to see where the other location starts, and it takes a long time with 100,000 duplicates.

I can't understand why there is a duplicate finder in it with such a poor selection function.

After selecting all files that are to be deleted, I push the delete button and they are gone.

After the delete window closes, DO does not respond. The DO task is very busy.

There is no message or window telling me that DO is doing something.

The number of duplicate entries goes down, but DO can't be used.

Directory Opus is a great tool, but some things are still needed to handle files really well.

Going through so many files to manually select the ones that should be deleted sounds like a lot of work or a disaster just waiting to happen.

Keep in mind you don't have to delete files in the Duplicate File Finder. All the duplicates are stored in a collection against which you can run the Find command with a filter as complex as you need it. Or work in batches and use a series of simple Find statements.

Yes, it is catastrophic. I must compare 20 million files and delete the duplicates.

I lost a 10-bay Synology system and an iMac with a 3 TB internal hard disk to a lightning strike during a storm.

Now I must compare the restored files and all 53 external hard disks to make a new milestone of all files.

For comparing I use only two directories:

- Source Directory (no files will be deleted; files that are not duplicates are added, so this directory will grow)

- Target Directory (duplicates will be removed here; other files will be moved to the Source Directory)
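The source/target workflow in the two bullets above could be scripted outside Opus. Below is a minimal Python sketch, assuming content is compared by SHA-256 hash; it only builds a plan (what to delete from the target, what to move to the source) and touches nothing. `plan_merge` and the demo file names are invented for the example.

```python
import hashlib
import os
import tempfile

def file_hash(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def walk_files(root):
    """Yield every file path below root."""
    for dirpath, _dirs, names in os.walk(root):
        for name in sorted(names):
            yield os.path.join(dirpath, name)

def plan_merge(source_root, target_root):
    """Dry run: nothing in source is ever listed for deletion."""
    known = {file_hash(p) for p in walk_files(source_root)}
    to_delete, to_move = [], []
    for path in walk_files(target_root):
        digest = file_hash(path)
        if digest in known:
            to_delete.append(path)   # content already exists in source
        else:
            to_move.append(path)     # new content: source grows
            known.add(digest)        # later copies in target count as duplicates
    return to_delete, to_move

# Demo on throwaway files (all names are made up for illustration).
with tempfile.TemporaryDirectory() as tmp:
    source = os.path.join(tmp, "source")
    target = os.path.join(tmp, "target")
    os.makedirs(source)
    os.makedirs(target)
    for folder, name, text in [
        (source, "a.txt", "one"),
        (target, "b.txt", "one"),   # duplicate of source/a.txt
        (target, "c.txt", "two"),   # new content
        (target, "d.txt", "two"),   # second copy of the new content
    ]:
        with open(os.path.join(folder, name), "w") as fh:
            fh.write(text)
    to_delete, to_move = plan_merge(source, target)
    deleted_names = sorted(os.path.basename(p) for p in to_delete)
    moved_names = sorted(os.path.basename(p) for p in to_move)

print(deleted_names, moved_names)
```

Hashing every file is slow at the scale of millions of files; comparing file sizes first and hashing only files with matching sizes would likely be needed in practice.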

It would be very helpful if I could select only files from the Target directory in the basket.

At the moment I select the first entry, move to the middle, and search for the last entry from this directory.

It is very slow with many entries, and the computer gets very, very slow.

I would be very happy if you have an idea for the selecting.

Thanks

With that amount of files you definitely need some automation!

To speed up finding the duplicates you could batch your work by

- filtering by type and/or size

- only comparing two folders at a time instead of two drives

To select the duplicates you could

- sort by location in the duplicate file finder itself

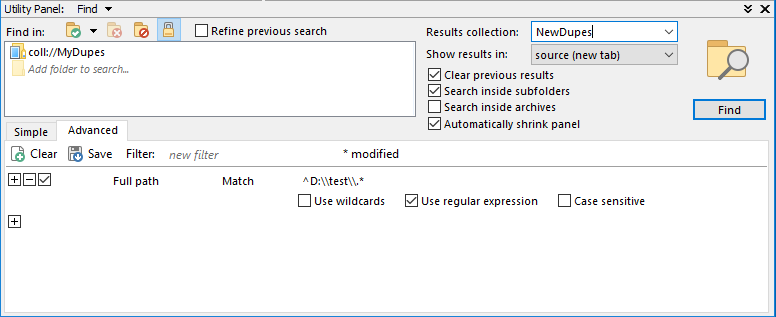

- filter the collection of duplicates, e.g. like so:

If you can, post some screenshots. That would ease the discussion.

Thanks for this information.

I'm very surprised; I didn't know it is possible to filter the "Duplicate Files" tab.

Why are the "^" and the dot in the text field in your screenshot?

With regular expressions it does not work for me.

The filter dialog is a function in Directory Opus that I don't know how to handle properly yet.

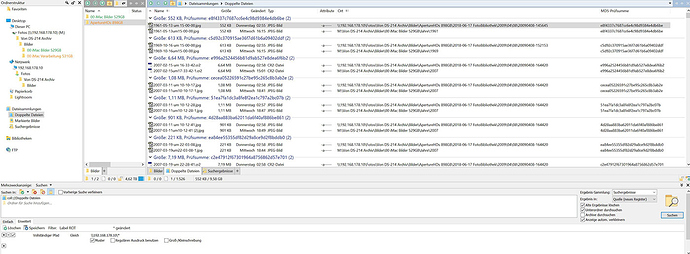

Here is a picture where you can see some of the folders that I compare.

Otherwise, I have tested filtering the "Duplicate Files" folder and it works fine and helps to save many steps and a lot of time.

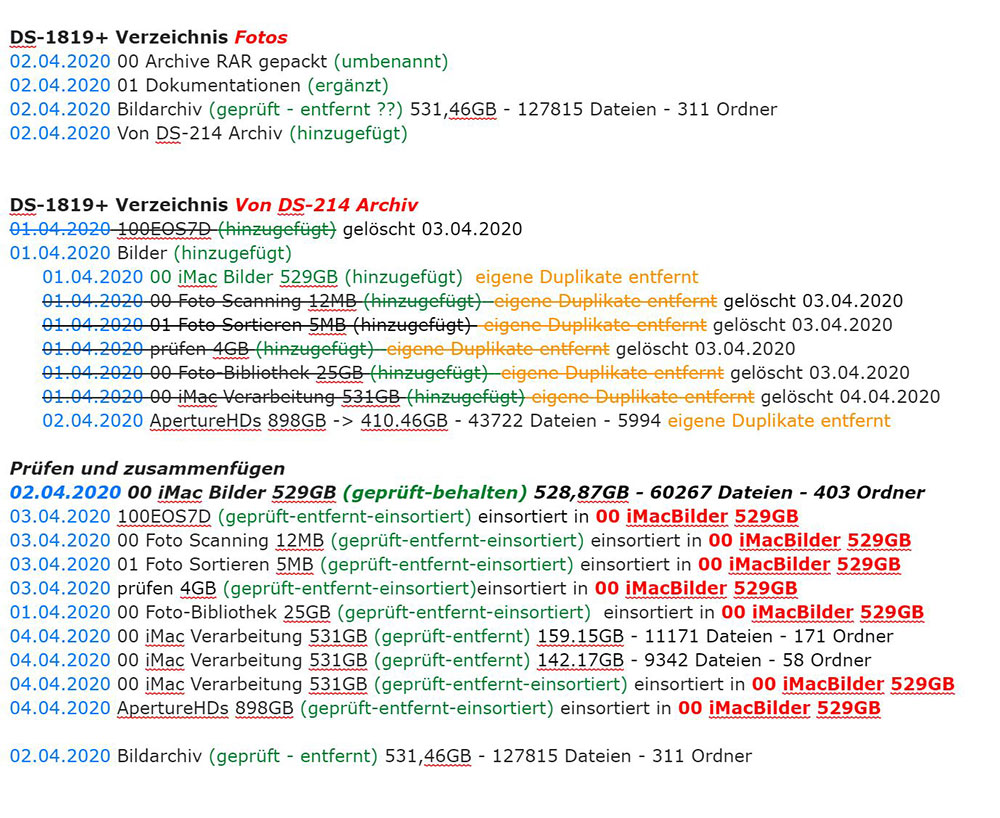

The last picture is a report of what I have done, because I work only with copies. Once the work has turned out right, I delete the original files from the different DiskStations and make a current backup of this directory.

You probably didn't escape the backslashes. This would be the correct regex in your case:

^\\\\192.168.178.10\\.*

But don't worry, normal pattern matching is fine.
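The backslash escaping can be checked outside Opus too. The sketch below uses Python's `re` module, which is not the regex engine Opus uses, but it treats backslashes the same way for this pattern. The share and file names in the test paths are invented.

```python
import re

# The pattern from the post: each literal backslash is written as "\\".
escaped = re.compile(r"^\\\\192.168.178.10\\.*")

# What happens without doubling the backslashes: "\\" now means ONE
# literal backslash, and "\." means a literal dot.
unescaped = re.compile(r"^\\192.168.178.10\.*")

# A stricter variant (an addition, not from the thread) that also
# escapes the dots, so "." cannot match an arbitrary character.
strict = re.compile(r"^\\\\192\.168\.178\.10\\.*")

path = r"\\192.168.178.10\share\file.txt"      # hypothetical UNC path
odd_path = r"\\192.168.178x10\share\file.txt"  # not a real address

print(bool(escaped.match(path)))       # escaped pattern matches the UNC path
print(bool(unescaped.match(path)))     # unescaped pattern does not
print(bool(escaped.match(odd_path)))   # unescaped dots accept any character
print(bool(strict.match(odd_path)))    # strict variant rejects it
```

For a filter on a single known server the looser pattern is fine in practice; the strict form only matters if similar-looking paths could appear.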

Well, the Find and Filter functions would be examples of some things that are already available.