I'd want to know more about what libjpeg-turbo is doing before assuming the output is better.

Blurring the JPEG data might make things look better on images that are over-compressed to the point of showing extreme blocking, but it might make high-quality images look worse by blurring their details.

Maybe it's more intelligent than that, but so far we only know it makes one image look better.

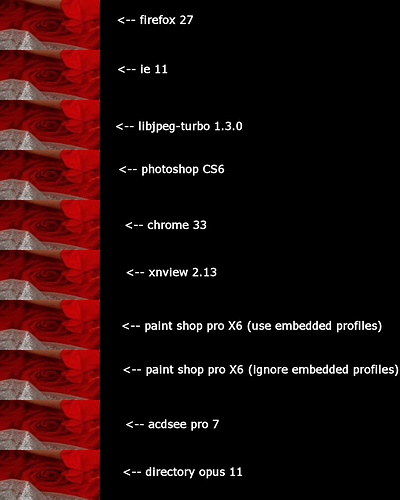

I'm also still wondering why you see smoothing in apps where I've checked and seen blocking. I'm not convinced by your assumption that everything is using libjpeg-turbo, since I see different results in some of the same apps. (I suspect different color management settings are confusing the issue: the colors differ drastically depending on the app used, and sometimes that washes out details in the red dress, which also makes the blocking harder to see.)

Another thing to note is that you can just save your images at a higher quality setting if you don't want them to have huge JPEG artifacts. The artifacts are in the images; it's just a matter of how much the image data is blurred to hide them. (Unless there's something even stranger going on, like a JPEG encoder that's producing data that libjpeg-turbo decodes properly but the reference decoder does not, maybe by accident because the author only tested highly compressed images with libjpeg-turbo.)

At the moment there are too many unknowns for us to switch from libjpeg to libjpeg-turbo, but it's a possibility if we can get answers to these questions and prove to ourselves that libjpeg-turbo is genuinely better (and not just blurring what it outputs).
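One rough way to tell "hides blocking" apart from "blurs everything" would be to compare how big the pixel steps are at 8-pixel block boundaries versus inside the blocks, for the same image decoded both ways. The following is only a sketch of that idea: the function names and the metric are my own invention, it assumes the decoded image is already available as rows of grayscale values, it only looks at horizontal steps, and it is not something either library does or provides.

```python
def mean_abs_step(rows, columns):
    """Mean absolute difference between pixel x-1 and pixel x, over the given columns."""
    total = 0
    count = 0
    for row in rows:
        for x in columns:
            total += abs(row[x] - row[x - 1])
            count += 1
    return total / count if count else 0.0


def blocking_and_detail(rows, block=8):
    """Return (boundary_step, interior_step) for a 2D list of grayscale values.

    boundary_step: average step across block edges (a proxy for blocking).
    interior_step: average step inside blocks (a proxy for real detail).
    Both names and the metric itself are hypothetical.
    """
    width = len(rows[0])
    boundary = [x for x in range(1, width) if x % block == 0]
    interior = [x for x in range(1, width) if x % block != 0]
    return mean_abs_step(rows, boundary), mean_abs_step(rows, interior)


# Synthetic test data: flat 8-pixel-wide blocks with a jump of 40 between them,
# i.e. nothing but blocking and no detail at all.
blocky = [[(x // 8) * 40 for x in range(32)] for _ in range(8)]
boundary_step, interior_step = blocking_and_detail(blocky)
print(boundary_step, interior_step)
```

If a decoder were only smoothing away blocking, I'd expect the boundary number to drop on over-compressed images while the interior number stays about the same on high-quality ones. If both drop together on high-quality images, that would suggest it is just blurring its output. That still wouldn't tell us which output is more correct, only whether detail is being lost.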

Thanks for the apology. Maybe try harder to not abuse us next time we point out doubts in the conclusions you've jumped to.