Getting back to the OnRename()/OnMove() event-thing.

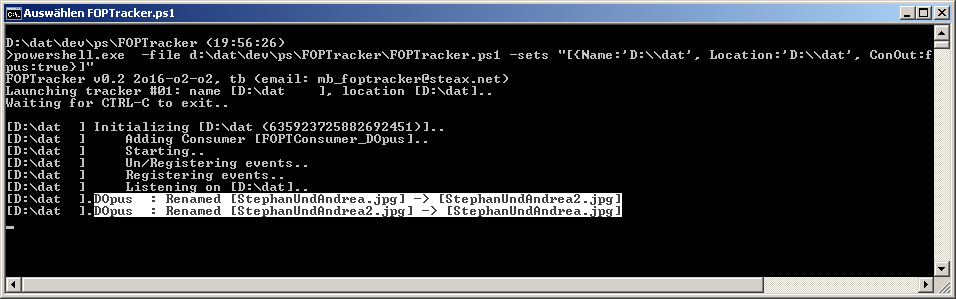

Over the last few weeks I created "FOPTracker", a small filesystem-watching framework/tool. It can track filesystem changes such as creating, deleting or renaming files and folders. Special feature #1: it's modular (plugins), so you can hook your own logic into it. Special feature #2: it can detect move operations, which is something I haven't seen before. It's not magic: FOPTracker simply pairs delete and create operations that happen within a short timespan, and if the file names are equal, voilà, there's the move.
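
For anyone curious how such pairing can work, here is a rough Python sketch of the idea. It is not FOPTracker's actual code (FOPTracker is PowerShell); the `Event` type, the `"created"`/`"deleted"` kind names and the 2-second window are assumptions made up for illustration. A delete and a create with the same (case-insensitive) file name inside the window, in either order, are merged into one move. Everything else is passed through unchanged.

```python
from dataclasses import dataclass
from pathlib import PureWindowsPath


@dataclass
class Event:
    kind: str    # hypothetical event kinds: "created" or "deleted"
    path: str    # full Windows path of the affected item
    time: float  # timestamp in seconds


def _name(path: str) -> str:
    # Windows file names are case-insensitive, so compare casefolded names.
    return PureWindowsPath(path).name.casefold()


def detect_moves(events, window=2.0):
    """Pair a delete and a create of the same file name that occur within
    `window` seconds of each other (in either order) into one move."""
    pending = []  # events still waiting for a partner
    result = []
    for ev in sorted(events, key=lambda e: e.time):
        # Events whose window has expired can no longer be paired: emit them as-is.
        still_waiting = []
        for p in pending:
            if ev.time - p.time > window:
                result.append((p.kind, p.path))
            else:
                still_waiting.append(p)
        pending = still_waiting

        opposite = "created" if ev.kind == "deleted" else "deleted"
        match = next(
            (p for p in pending if p.kind == opposite and _name(p.path) == _name(ev.path)),
            None,
        )
        if match is None:
            pending.append(ev)
        else:
            pending.remove(match)
            # The delete marks the old location, the create marks the new one.
            src, dst = (match.path, ev.path) if match.kind == "deleted" else (ev.path, match.path)
            result.append(("moved", src, dst))

    # Whatever is left at the end never found a partner.
    result.extend((p.kind, p.path) for p in pending)
    return result


events = [
    Event("deleted", r"C:\a\report.txt", 0.0),
    Event("created", r"D:\b\report.txt", 0.5),
    Event("deleted", r"C:\a\old.log", 1.0),
]
print(detect_moves(events))
```

Here the first two events become one move from `C:\a\report.txt` to `D:\b\report.txt`, while `old.log` stays a plain delete. The trade-off of this approach is that a tracker has to hold every delete back for the length of the window before it can be sure it wasn't half of a move.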

A DO plugin is included: watch filesystem changes in the DO script console, or react to them by modifying the corresponding script command. The command is triggered with all the information required to mirror events to another lister/location on the fly, using the commands and tools DO has to offer. There's no need to set DO's installation path anywhere; it is auto-detected.

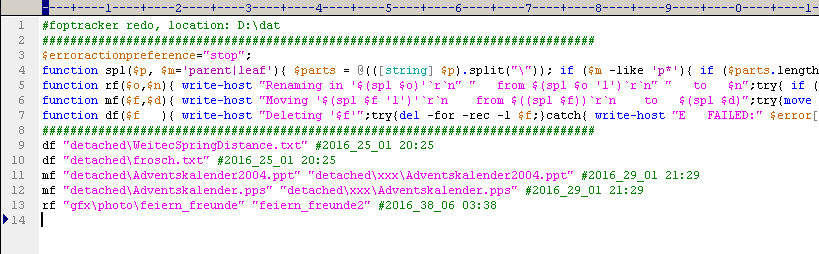

By default, FOPTracker creates PowerShell scripts for each tracked location (this can be disabled). These scripts look like log files, but they also run as PowerShell scripts. When run, they redo all the recorded filesystem activities in the location of your choice. I use this to mirror all delete/rename/move operations to my backup locations before the actual backup runs. This saves tons of time after heavy renaming or moving of big files and folders, since they no longer need to be deleted from the backup only to be copied back to it in a different place a few seconds later.
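
To illustrate the redo idea, here is a small Python sketch that turns recorded operations into PowerShell lines rebased onto a backup root. It is an assumption-based illustration, not the format FOPTracker actually writes: the operation tuples, `redo_script()` and its root parameters are hypothetical. The emitted commands use the real `Remove-Item` and `Move-Item` cmdlets with `-LiteralPath`, and paths are single-quoted (with `'` doubled) so that brackets and apostrophes in names are taken literally.

```python
from pathlib import PureWindowsPath


def ps_quote(text: str) -> str:
    # PowerShell single-quoted string: an embedded ' is escaped by doubling it.
    return "'" + text.replace("'", "''") + "'"


def rebase(path: str, src_root: str, dst_root: str) -> str:
    # Map a path under the tracked root onto the same relative path under the
    # backup root. Raises ValueError if `path` is not inside `src_root`.
    rel = PureWindowsPath(path).relative_to(PureWindowsPath(src_root))
    return str(PureWindowsPath(dst_root) / rel)


def redo_script(ops, src_root: str, dst_root: str) -> str:
    """Build PowerShell lines that replay deletes, renames and moves
    (hypothetical tuples like ("moved", old, new)) on another location."""
    lines = []
    for op in ops:
        kind = op[0]
        if kind == "deleted":
            target = rebase(op[1], src_root, dst_root)
            lines.append(f"Remove-Item -LiteralPath {ps_quote(target)} -Recurse -Force")
        elif kind in ("moved", "renamed"):
            # Move-Item covers renames too: same folder, different name.
            old = rebase(op[1], src_root, dst_root)
            new = rebase(op[2], src_root, dst_root)
            lines.append(f"Move-Item -LiteralPath {ps_quote(old)} -Destination {ps_quote(new)}")
        # Creates are skipped on purpose: the backup run copies new content anyway.
    return "\n".join(lines)


ops = [
    ("deleted", r"C:\Data\old.log"),
    ("renamed", r"C:\Data\a.txt", r"C:\Data\b.txt"),
    ("moved", r"C:\Data\docs\it's.doc", r"C:\Data\archive\it's.doc"),
    ("created", r"C:\Data\new.txt"),
]
print(redo_script(ops, r"C:\Data", r"E:\Backup"))
```

Skipping creates is the point of the exercise: only the cheap structural changes are replayed on the backup, and the regular backup tool then only has to copy genuinely new data. Note that `Move-Item` fails if the destination folder doesn't exist yet, so a real redo script would need to handle that.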

You can create MS-DOS batch log/redo files as well; just note that this hasn't been tested as heavily as the PowerShell logging. The tracker can be run on "non-existing" drives and locations: it will simply sit and wait until you mount the drive or path it should watch. You can also eject/remove the tracked location at any time, and FOPTracker will pause. You can track multiple locations in parallel with one FOPTracker instance (PowerShell's memory footprint is huge, so this saves a few hundred MB of RAM compared to running multiple instances). There are a bunch of parameters to fine-tune tracking to specific files/folders, or to exclude specific items by type and size.
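
As a rough picture of what include/exclude-by-type-and-size filtering amounts to, here is a hypothetical Python predicate. The parameter names (`include`, `exclude`, `min_size`, `max_size`) are invented for this sketch and are not FOPTracker's actual parameters. Wildcards are matched against the lowercased file name so that `*.txt` also matches `A.TXT`, as Windows users would expect.

```python
from fnmatch import fnmatchcase
from pathlib import PureWindowsPath


def should_track(path, size, include=("*",), exclude=(), min_size=None, max_size=None):
    """Hypothetical filter: keep an item only if its name matches an include
    pattern, matches no exclude pattern, and its size (bytes) is in range."""
    name = PureWindowsPath(path).name.lower()
    if not any(fnmatchcase(name, pat.lower()) for pat in include):
        return False
    if any(fnmatchcase(name, pat.lower()) for pat in exclude):
        return False
    if min_size is not None and size < min_size:
        return False
    if max_size is not None and size > max_size:
        return False
    return True


# Track text files, but ignore temp files that start with "~".
print(should_track(r"C:\Data\notes.TXT", 1200, include=("*.txt",), exclude=("~*",)))
print(should_track(r"C:\Data\~notes.txt", 1200, include=("*.txt",), exclude=("~*",)))
```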

Requirements:

- PowerShell v3+ (Windows 7 ships with v2; download: microsoft.com/en-us/downloa ... x?id=34595)

- FOPTracker-ScriptCommand and DOpus v11 (optional)

- Since this is "fresh" software, don't run it on expensive space shuttles

- Admin elevation maybe?

FOPTracker running and waiting for activity (you don't need to keep the window open).

DOpus script console, showing incoming FOPTracker events.

Demonstration redo/repeat PowerShell script/log file.

Download v0.2:

FOPTracker.zip (18.2 KB)

Give it a go, I'm quite happy with it! o)

Uh, this off-topic post got bigger than I intended, sorry! o)