If you're using NTFS on both your source & target, I might be able to help, if you're OK with using SHA1 (*).

A while ago I posted a proof-of-concept of multi-threaded hashing with DOpus, and I have since converted it into a full-fledged script. It has been very stable since March or so, and I have been syncing 10-15 TB between multiple disks with it myself: all my movies, music, pictures, business files...

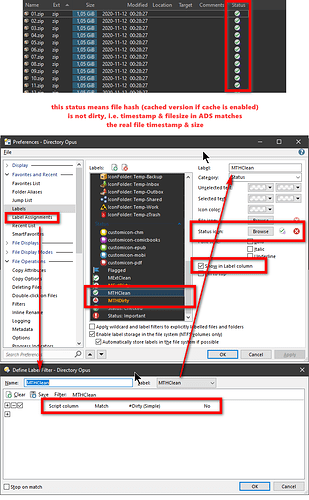

The script attaches SHA1 checksums to the files, i.e. it creates an ADS (alternate data stream) for each file (hence the NTFS requirement), so you can process each file individually. The checksum record keeps track of the file size and modification timestamp but not the name. That means you can rename the file as you wish, but as soon as the size or modification date changes and you try to verify it, the script warns you that the checksum is outdated or "dirty". Unlike all checksum programs I've evaluated or know of, it works per file, so you don't need to compare full folders, which most likely contain deleted or renamed files and so cause unnecessary false alarms.
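To make the "dirty" idea concrete, here is a minimal Python sketch of the logic as I described it. It is not the script's actual code (that runs inside DOpus), and the names `make_record` and `check` are made up for this illustration. The record is passed around as a plain dict here; the real script keeps it in an ADS on the file itself.

```python
import hashlib
import os


def sha1_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large files never have to fit in memory."""
    h = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def make_record(path):
    """Hypothetical record layout: hash plus size and modification time, no name."""
    st = os.stat(path)
    return {"sha1": sha1_of(path), "size": st.st_size, "mtime_ns": st.st_mtime_ns}


def check(path, record):
    """Return 'missing', 'dirty', 'failed' or 'verified' for one file."""
    if record is None:
        return "missing"  # no checksum stored for this file
    st = os.stat(path)
    if (st.st_size, st.st_mtime_ns) != (record["size"], record["mtime_ns"]):
        return "dirty"  # file changed after hashing, so the checksum is outdated
    return "verified" if sha1_of(path) == record["sha1"] else "failed"
```

Because the record never mentions the file name, a renamed file still verifies. A file whose size and timestamp still match but whose content hashes differently is the interesting case: that is silent corruption and ends up as "failed".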

If the checksums are missing, you can re-calculate them on the fly, or export them from the existing ADS, copy the exported file to another machine/disk, import them there and then verify the files. The flexibility and possibilities are far beyond traditional checksum programs. The checksum calculation is done entirely by DOpus; I put the rest on top of it: the multi-threading, export, import, verify, find dirty, find missing, long-filename handling, etc. I've also done extensive checks and compared the hashes to those from external programs, and it has never failed so far. In multi-threaded mode it's faster than all but one of the checksum programs I know of, faster than binary compare (since only one disk is involved), and faster than verifying RAR/ZIP files via WinRAR/7-Zip. Single-threaded it's as fast as DOpus and your CPU/disk allow; the script overhead is minimal.
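For anyone curious what multi-threaded hashing looks like in general, here is a rough Python sketch using a thread pool. Again, this is only an illustration and not how the DOpus script is implemented; `hash_many` and its `threads` parameter are names I made up for the example.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def sha1_of(path, chunk_size=1 << 20):
    """Hash one file in chunks."""
    h = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def hash_many(paths, threads=4):
    """Hash several files in parallel and return {path: sha1 hex digest}."""
    paths = list(paths)
    # threads=1 degrades to sequential hashing, which is what you want
    # on a spinning disk to avoid thrashing.
    with ThreadPoolExecutor(max_workers=max(1, threads)) as pool:
        # pool.map yields results in the same order as the input paths
        return dict(zip(paths, pool.map(sha1_of, paths)))
```

Threads pay off mainly on SSDs or when the files sit on different disks; on a single spinning disk, parallel reads usually make things slower, hence the option to fall back to one thread.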

Regarding your question, I do almost the same thing you want daily: I perform a simple sync without binary comparison, then go to flat view in the target, sort by modified date descending and verify only the newest files (incl. gigantic 4K movies or VeraCrypt containers). Of course, every now and then you can verify your external disks completely. The script uses 4 different collections to show you the 1. verified, 2. failed, 3. dirty and 4. missing hashes of any folders/files you select. Since it's file-based, it works with flat view or collections as well. It has a progress bar with pause/abort, a setting for the number of threads, and there's even disk type detection that sets the thread count to 1 for spinning disks to avoid disk thrashing, but no configuration screen (yet)!
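Outside DOpus, the "flat view, sorted by modified descending" step could be approximated like this in Python. It is just a sketch of the idea; `newest_first` and its `limit` parameter are hypothetical names, not part of the script.

```python
import os


def newest_first(root, limit=None):
    """List all files under root, most recently modified first."""
    found = []
    for folder, _dirs, names in os.walk(root):
        for name in names:
            path = os.path.join(folder, name)
            found.append((os.stat(path).st_mtime_ns, path))
    found.sort(reverse=True)  # largest mtime (newest) first
    paths = [path for _mtime, path in found]
    return paths if limit is None else paths[:limit]
```

After a sync you would then verify only the first few entries, i.e. the files that were just copied, instead of the whole target.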

You might ask why I haven't announced the script yet: I planned many more features but got extremely frustrated with the huge and ever-growing script file, so I put it on ice for a while. Its source looks too chaotic for my developer pride, but if you are interested, message me; I can help you set it up and support you with its usage. The GitHub version is stable, but there's a more recent, equally stable version I can upload if you're interested.